Scraping a website for data can be quite cumbersome without a bit of coding knowledge. Some developers have become so specialized at it that there are now web services where customers enter the website they'd like to scrape and the service returns the data as a structured spreadsheet. Since learning the basics of web scraping, I've become quite good at it, but it still involves painstaking, monotonous steps. Enter AI tools such as ChatGPT. The steps below cover how to use it to generate a working Python web scraper that grabs content from a website, how to troubleshoot the code if it fails, and how to store the scraped data neatly.

Getting Started with ChatGPT

Before actually scraping anything, you will need to spend a bit of time setting up your environment and working out how to use your software tools. In this section, I will take you through the basics of ChatGPT and how to begin your scraping project.

Setting Up Your Environment

First, make sure you have the Python programming language and the Jupyter Notebook environment installed on your computer, along with the latest version of Beautiful Soup (for parsing HTML) and the Requests library (for making HTTP requests).

Run the following command to install the required libraries:

pip install requests beautifulsoup4

Once your environment is ready, you can start using ChatGPT to generate the initial code.
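Before moving on, it can help to confirm that the libraries installed into the same Python environment your notebook uses. The following is a minimal sketch using only the standard library; `check_installed` is a hypothetical helper name. Note that the import name for Beautiful Soup is `bs4`, not `beautifulsoup4`.

```python
import importlib.util
import sys


def check_installed(modules):
    """Return a dict mapping each top-level module name to True if it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    # 'bs4' is the import name of the beautifulsoup4 package
    for name, ok in check_installed(["requests", "bs4"]).items():
        print(f"{name}: {'installed' if ok else 'MISSING'}")
```

If a library shows as missing inside Jupyter, running `%pip install requests beautifulsoup4` in a notebook cell installs it into the kernel's own environment.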

Generating Code with ChatGPT

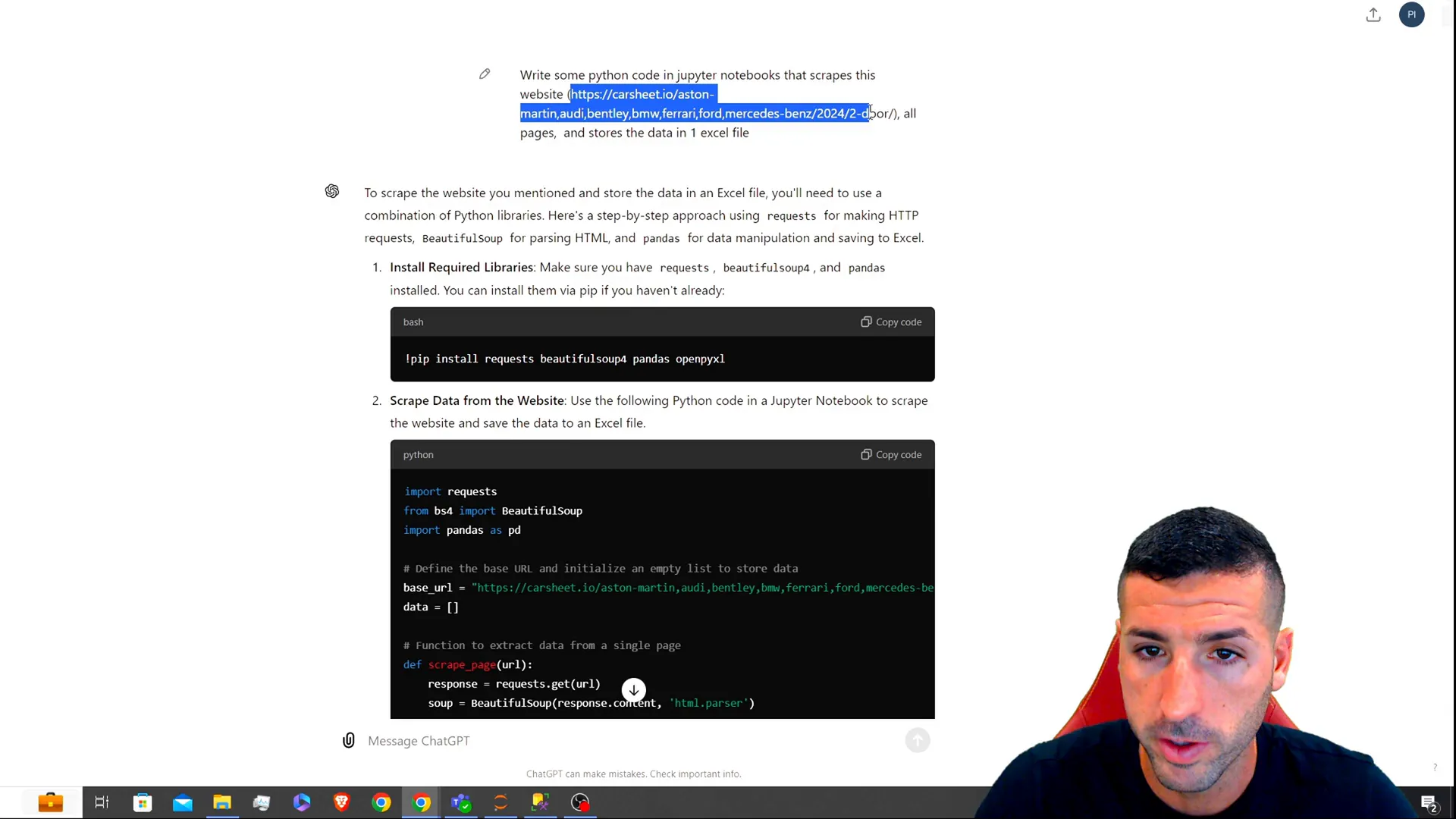

Once you're up and running, you can ask ChatGPT to write the Python code you need to scrape the site. To do this, tell it exactly which data you want it to collect.

Creating Your First Prompt

The clearer and more descriptive your prompt, the better the code ChatGPT will generate. For instance, a vague request such as ‘Scrape this website and bring back a list of car models’ might become:

Write a Python script using Beautiful Soup to scrape car models from [URL]. I need the following data: make, model, year, and price. Please save the data in a CSV file.

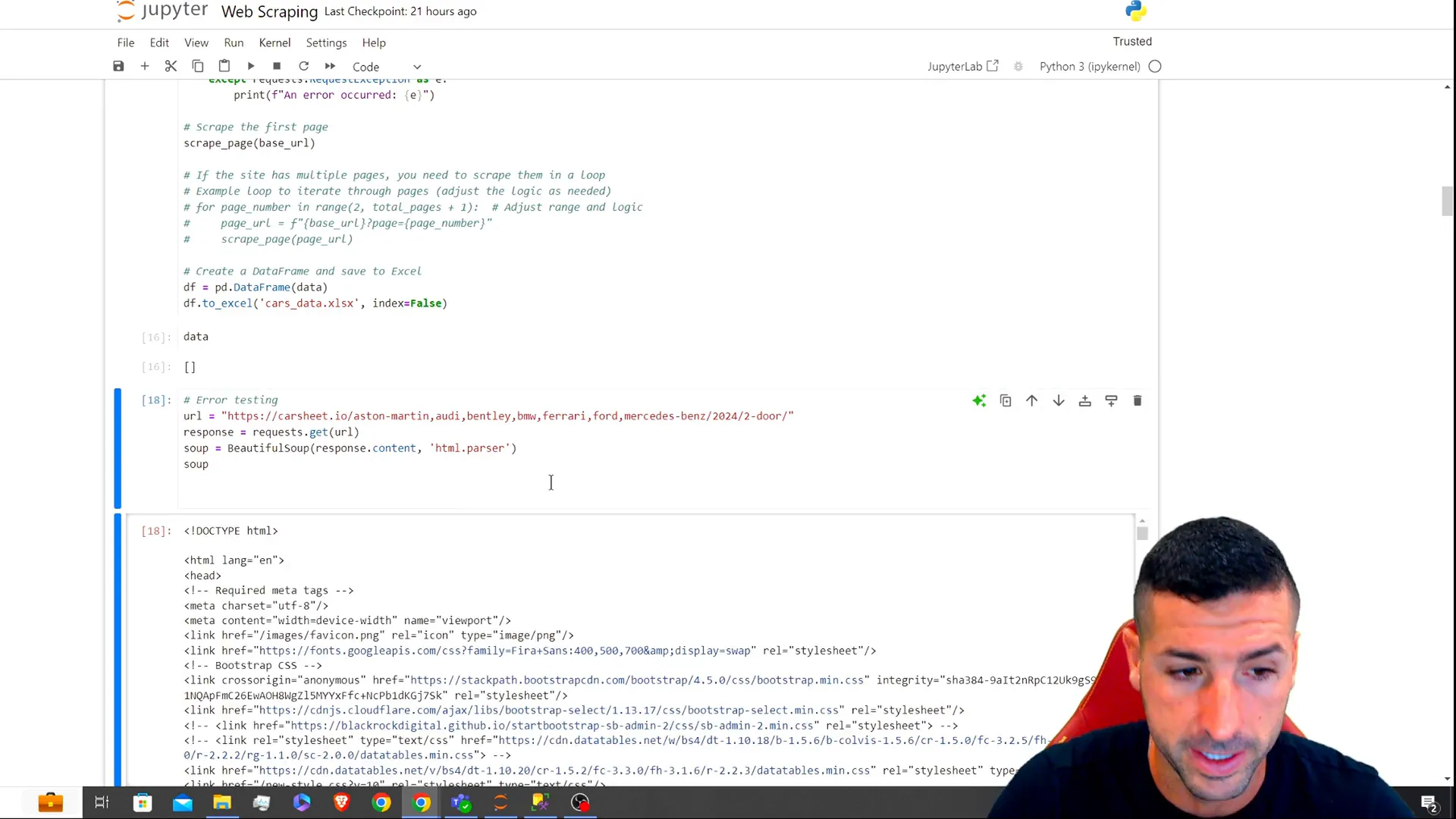
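To give a sense of what to expect, here is a minimal sketch of the kind of script such a prompt might produce. It is not ChatGPT's actual output: it assumes a hypothetical page where the cars sit in a `<table class="cars">` with one `<td>` per field, and the URL is a placeholder.

```python
import requests
from bs4 import BeautifulSoup

URL = "https://example.com/cars"  # placeholder; replace with the page you want to scrape


def fetch_html(url):
    """Download a page and raise requests.HTTPError on 4xx/5xx responses."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def parse_cars(html):
    """Return [make, model, year, price] rows from a hypothetical <table class="cars">."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", class_="cars")
    if table is None:
        return []  # the table wasn't found: the selector doesn't match this page
    rows = []
    for tr in table.find_all("tr"):
        cells = [td.get_text(strip=True) for td in tr.find_all("td")]
        if len(cells) == 4:  # skips the header row, whose cells are <th>, not <td>
            rows.append(cells)
    return rows
```

In a notebook you would then call `car_data = parse_cars(fetch_html(URL))`; the result is a list of rows in the shape the CSV and SQL snippets later in this post expect for `car_data`.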

Troubleshooting Common Issues

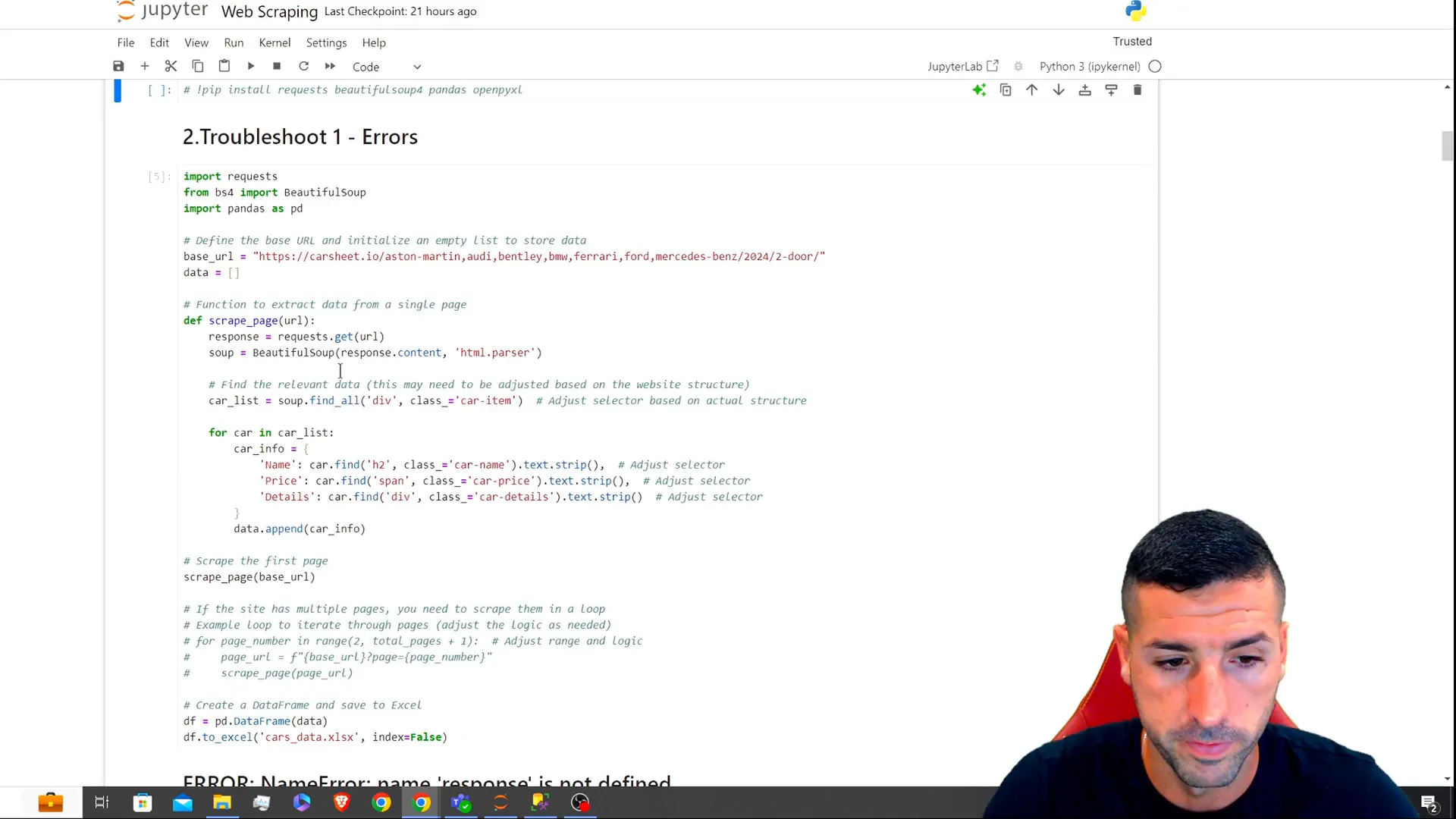

Even with a good prompt, the generated code may not behave as expected when it runs. This section focuses on debugging that scenario.

Identifying Errors

When you run the generated code, you may well get an error. Read the error message to identify its type and likely cause: for example, the server may have rejected the request, or the URL may be malformed.
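Two quick checks often narrow down the cause. In this minimal standard-library sketch, `check_url` (a hypothetical helper) flags a URL missing its scheme or domain, and `describe_status` turns an HTTP status code, such as `response.status_code` from Requests, into its standard name plus a rough hint. The hints are my own rules of thumb, not an exhaustive diagnosis.

```python
from http import HTTPStatus
from urllib.parse import urlparse


def check_url(url):
    """Return a list of structural problems with the URL (empty if it looks fine)."""
    parts = urlparse(url)
    problems = []
    if parts.scheme not in ("http", "https"):
        problems.append("missing or unsupported scheme (expected http:// or https://)")
    if not parts.netloc:
        problems.append("missing domain name")
    return problems


# Rough hints for common failure codes; rules of thumb only
HINTS = {
    400: "the request is malformed; check the query parameters",
    403: "the site is refusing access; it may block scrapers",
    404: "the page does not exist; check the URL path",
    429: "too many requests; slow down between requests",
}


def describe_status(code):
    """Return 'code Phrase: hint' for an HTTP status code."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:
        phrase = "Unknown status"
    hint = "server-side error; try again later" if code >= 500 else HINTS.get(code, "no specific hint")
    return f"{code} {phrase}: {hint}"
```

For example, `check_url("www.example.com/cars")` reports both a missing scheme and a missing domain, because without `https://` the whole string is parsed as a path.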

If something goes wrong, copy the error message, paste it back into ChatGPT, and ask it to explain the problem and fix the code.
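ChatGPT tends to give better fixes when it sees the full traceback rather than only the last line. As a sketch, the standard library's `traceback` module can capture it as text; `run_and_capture` is a hypothetical helper, and `broken` stands in for whatever function your generated script runs.

```python
import traceback


def run_and_capture(func, *args, **kwargs):
    """Run func; return (result, None) on success or (None, traceback_text) on failure."""
    try:
        return func(*args, **kwargs), None
    except Exception:
        # format_exc() returns the traceback Python would print, as a string
        return None, traceback.format_exc()


if __name__ == "__main__":
    def broken():
        return int("not a number")  # deliberately raises ValueError

    _, error = run_and_capture(broken)
    if error:
        print(error)  # paste this whole text into ChatGPT along with your code
```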

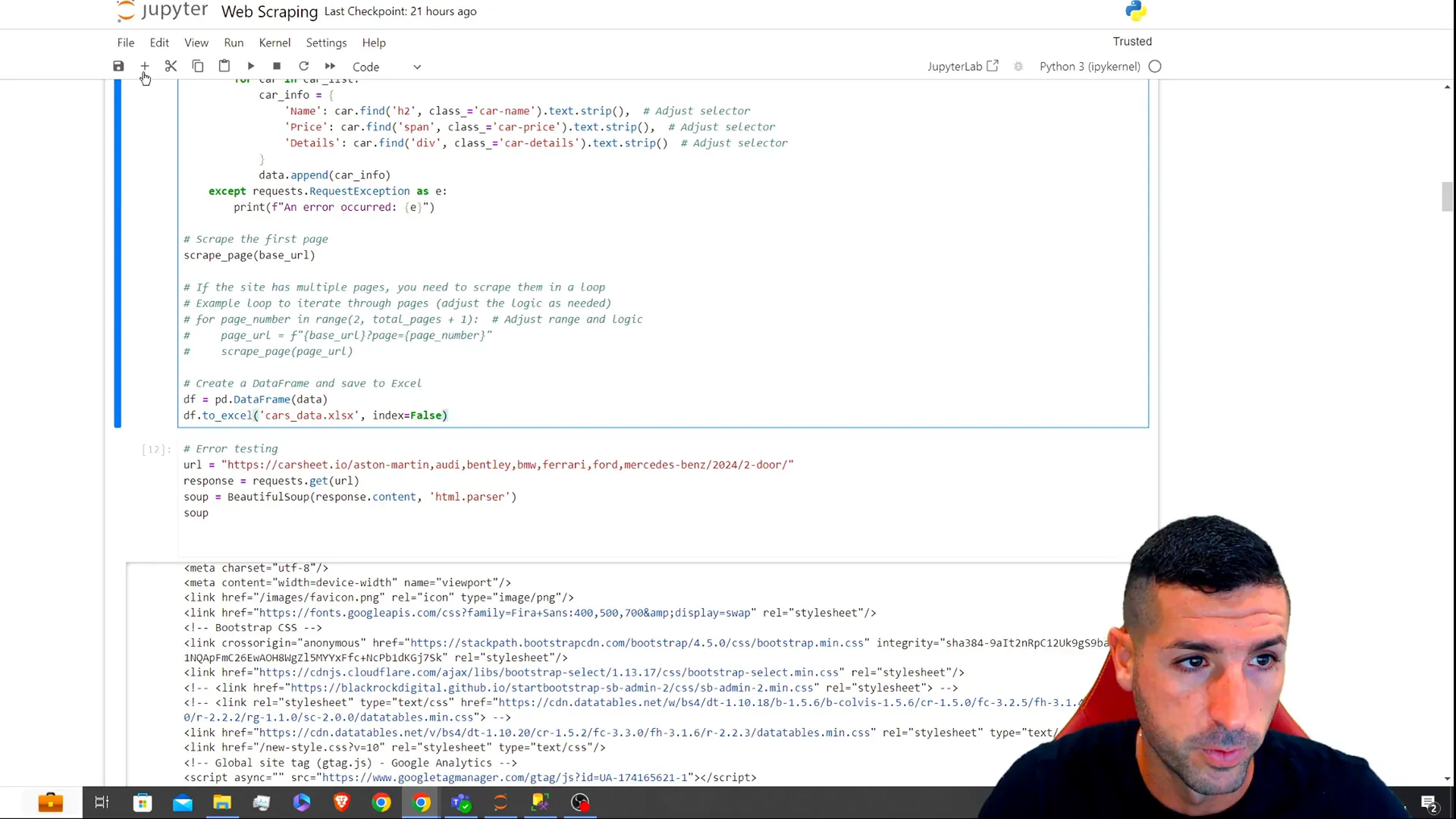

Testing the Code

Then run the revised code again to see whether it works. If it runs but produces empty output, the tags or classes the code looks for probably don't match the page's actual HTML.

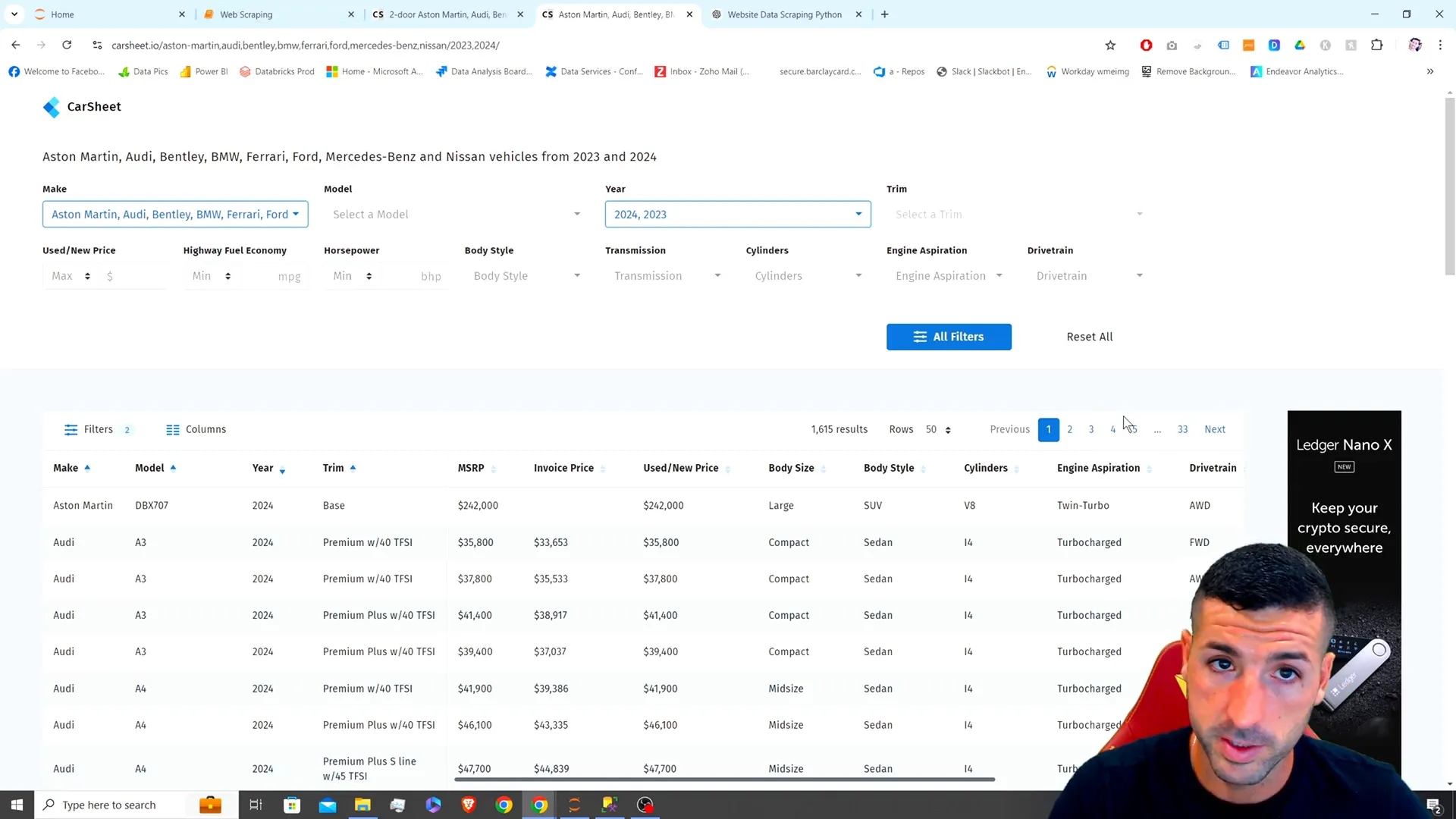
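When the output is empty, it helps to check whether the data is in the downloaded HTML at all. If text you can see in the browser is missing from it, the page probably fills that content in with JavaScript, which Requests and Beautiful Soup alone won't see; if the text is there, the selectors in the code are the likelier culprit. A minimal standard-library sketch; `diagnose_empty_result` and the default file name are hypothetical.

```python
from pathlib import Path


def diagnose_empty_result(html, expected_text, save_to="page_debug.html"):
    """Save the downloaded HTML for inspection and report whether expected_text appears in it."""
    Path(save_to).write_text(html, encoding="utf-8")
    if expected_text in html:
        return "text found in raw HTML: check the tags/classes your code searches for"
    return "text missing from raw HTML: the page may load it with JavaScript"
```

Pass it the HTML your script downloaded and a value you can see on the page in your browser, such as one car model's name; the saved file can then be opened in a text editor.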

Understanding HTML Structure for Data Extraction

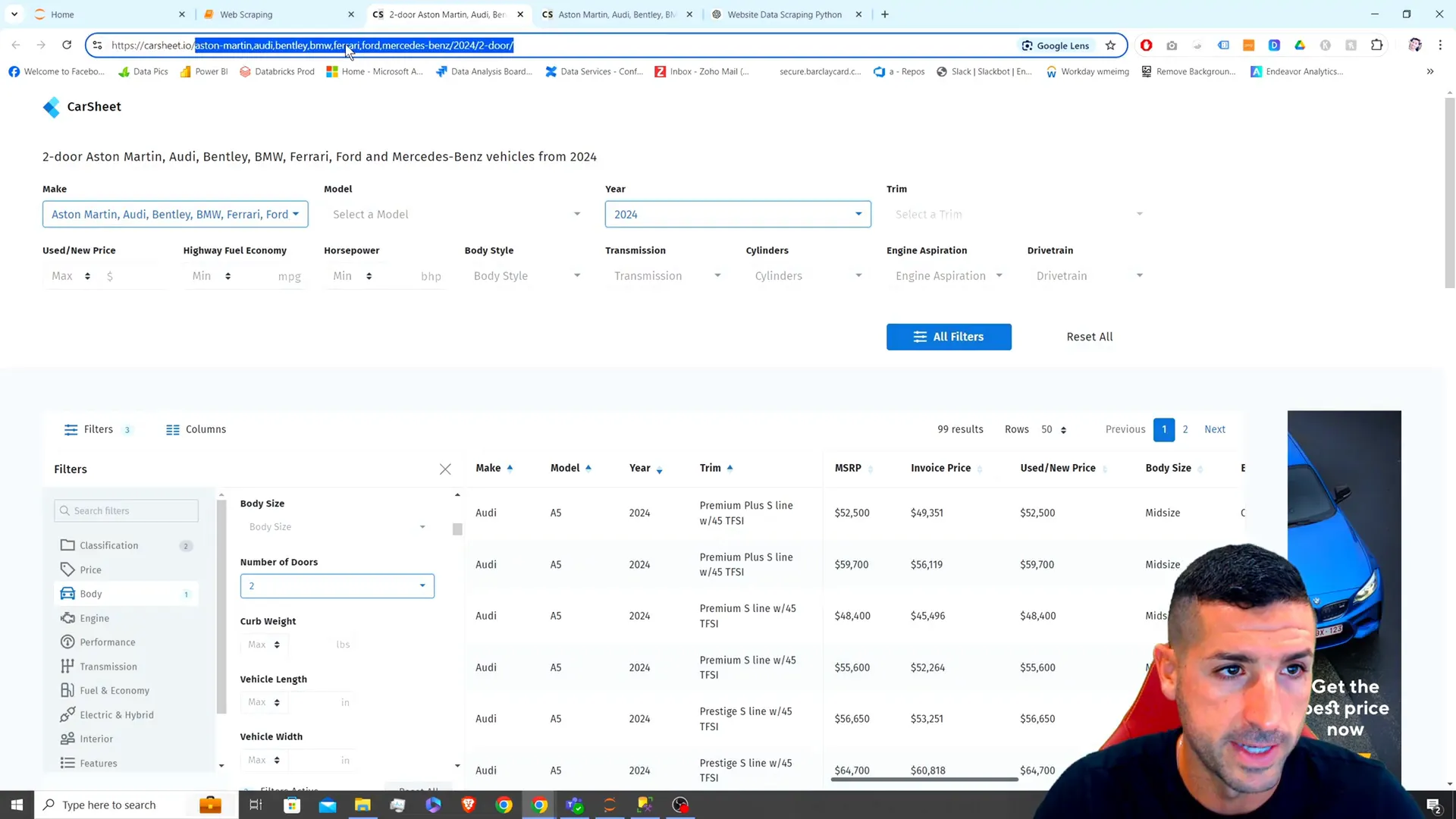

Before you start scraping, you need to know how the HTML of the target page is structured. This part of the tutorial explains how to identify the elements you want to extract.

Using Developer Tools

To view a page's HTML, right-click anywhere on the page in your browser, select Inspect, and open the tab labelled ‘Elements’. This shows the page's HTML structure, so you can locate the content you want to scrape.

For example, if you want the table listing car makes, find it in the Elements tab and note which tags and classes are attached to it.

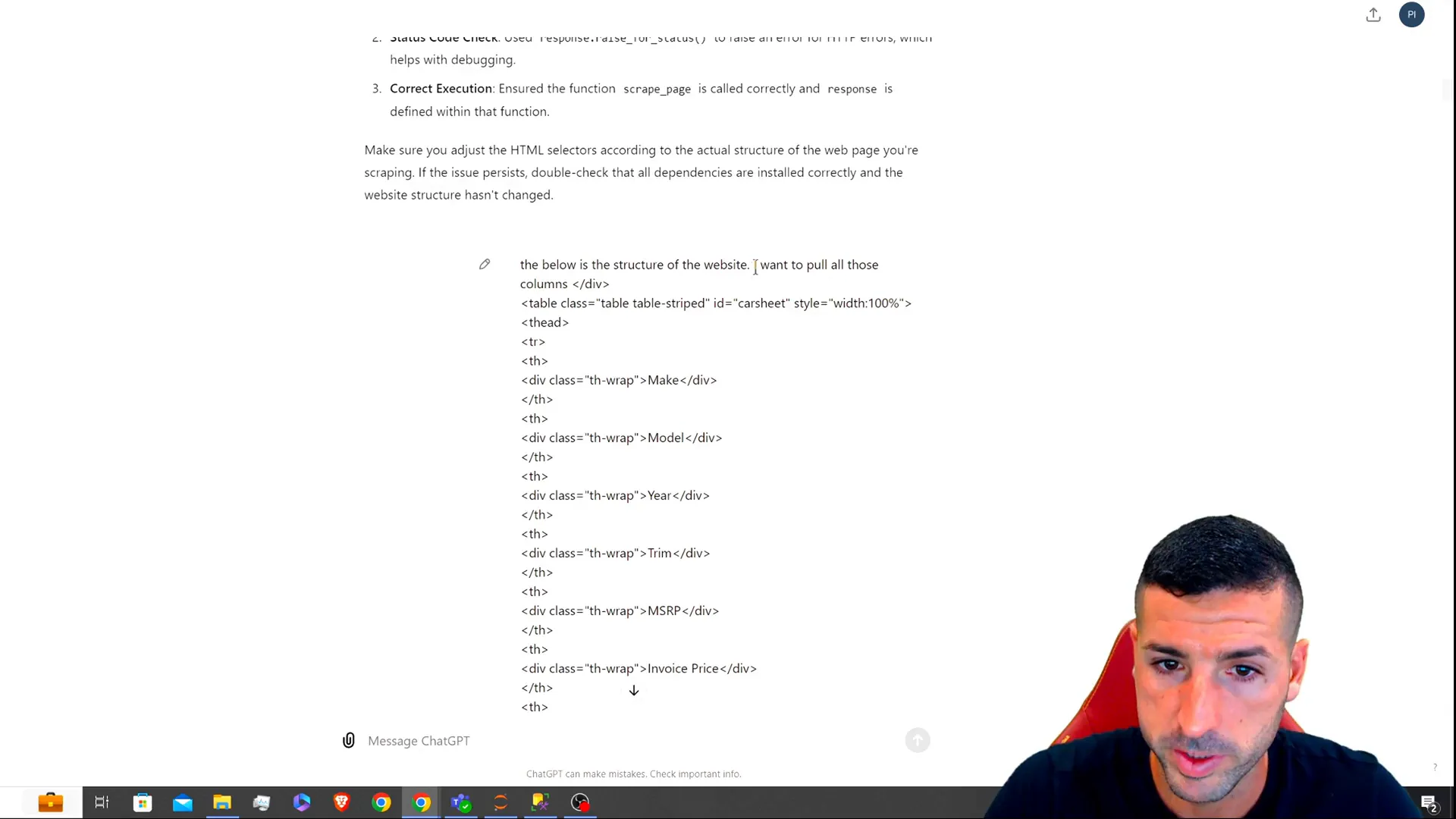
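The tag and class you find in the Elements tab translate directly into Beautiful Soup calls. As a sketch, assuming you found `<table class="car-list">` (a hypothetical class name), `find()` with `class_=` and `select()` with a CSS selector are two ways to target it; the sample HTML stands in for a real page.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet, as it might appear in the browser's Elements tab
SAMPLE_HTML = """
<table class="car-list">
  <tr><td class="make">Ford</td><td class="model">Focus</td></tr>
  <tr><td class="make">Honda</td><td class="model">Civic</td></tr>
</table>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Option 1: find() by tag name and class (class_ has a trailing underscore
# because 'class' is a reserved word in Python)
table = soup.find("table", class_="car-list")

# Option 2: a CSS selector; select() returns a list of all matches
makes = [td.get_text(strip=True) for td in soup.select("table.car-list td.make")]

print(table is not None)  # True
print(makes)              # ['Ford', 'Honda']
```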

Refining the Code with ChatGPT

Once you understand the HTML structure, you can refine ChatGPT's code so that it extracts the data correctly.

Providing Context to ChatGPT

Next, refine the code by telling ChatGPT about the page's specific HTML structure: for example, the relevant HTML, the names of the columns you want to pull, and the HTML tags they correspond to.

Here is the structure of the table I want to scrape:

Make:

Model:

Year:

Price:

ChatGPT will then generate an updated code snippet based on the new information you provided.
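Rather than describing the structure from memory, you can paste the element's actual HTML into the prompt. A minimal sketch with Beautiful Soup: `html_snippet` (a hypothetical helper) returns the markup of the first element matching a CSS selector, trimmed so the prompt stays short. The 3,000-character default is an arbitrary choice.

```python
from bs4 import BeautifulSoup


def html_snippet(html, css_selector, max_chars=3000):
    """Return the prettified HTML of the first match for css_selector, truncated to max_chars."""
    soup = BeautifulSoup(html, "html.parser")
    element = soup.select_one(css_selector)
    if element is None:
        return None  # nothing matched: re-check the selector in Developer Tools
    return element.prettify()[:max_chars]
```

The returned text can go directly under the ‘Here is the structure of the table I want to scrape’ prompt.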

Handling Multiple Pages

Some websites paginate their data, spreading it across several pages, so if your scraper reads only the first page, you may miss important information. This section covers how to extend your code to handle pagination.

Looping Through Pages

To scrape several pages, you can modify the code to include a loop that goes through the page numbers one by one:

for page in range(1, 4):  # Covers pages 1-3; adjust the range to the number of pages
    # Code to scrape data from each page goes here
    pass

Make sure to adjust the URL structure to reflect the pagination format used by the website.

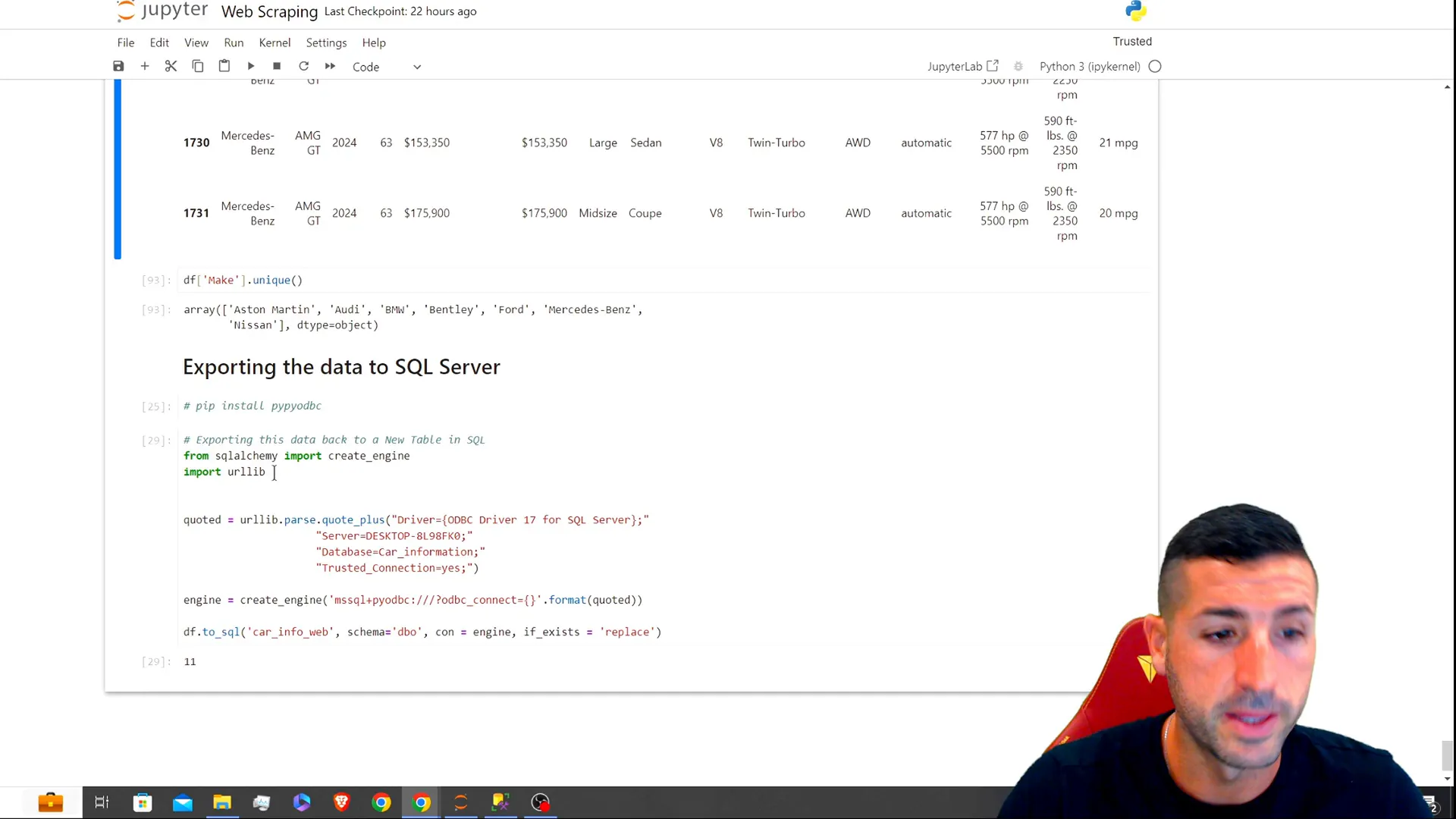
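A fixed range works when you know the page count. When you don't, one common pattern is to keep requesting pages until one returns no rows, pausing briefly between requests. The sketch below assumes a hypothetical `?page=N` URL format and takes your own download and parsing functions as parameters, so it isn't tied to any particular site.

```python
import time


def page_url(base_url, page):
    """Build the URL for one page; assumes a hypothetical '?page=N' query format."""
    return f"{base_url}?page={page}"


def scrape_all_pages(base_url, fetch, parse, max_pages=50, delay=1.0):
    """Fetch pages 1, 2, ... until a page yields no rows or max_pages is reached.

    fetch(url) must return an HTML string; parse(html) must return a list of rows.
    """
    all_rows = []
    for page in range(1, max_pages + 1):
        rows = parse(fetch(page_url(base_url, page)))
        if not rows:  # an empty page usually means we've gone past the last one
            break
        all_rows.extend(rows)
        time.sleep(delay)  # pause between requests to avoid overloading the server
    return all_rows
```

`max_pages` acts as a safety cap, since some sites return the last page again for out-of-range page numbers instead of an empty one.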

Exporting Data to Excel and a SQL Database

Once you have scraped the data, you will probably want to save it for future analysis. This part explains how to save it to a CSV file, which opens directly in Excel, and to a SQL database.

Saving Data in CSV Format

To save your scraped data in a CSV file, you can use the following code snippet:

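import csv

# car_data is the list of scraped rows, one [make, model, year, price] list per car
# 'utf-8-sig' writes a byte-order mark so Excel recognizes the file as UTF-8
with open('car_data.csv', mode='w', newline='', encoding='utf-8-sig') as file:
    writer = csv.writer(file)
    writer.writerow(['Make', 'Model', 'Year', 'Price'])  # Write headers
    writer.writerows(car_data)  # Write one line per scraped car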

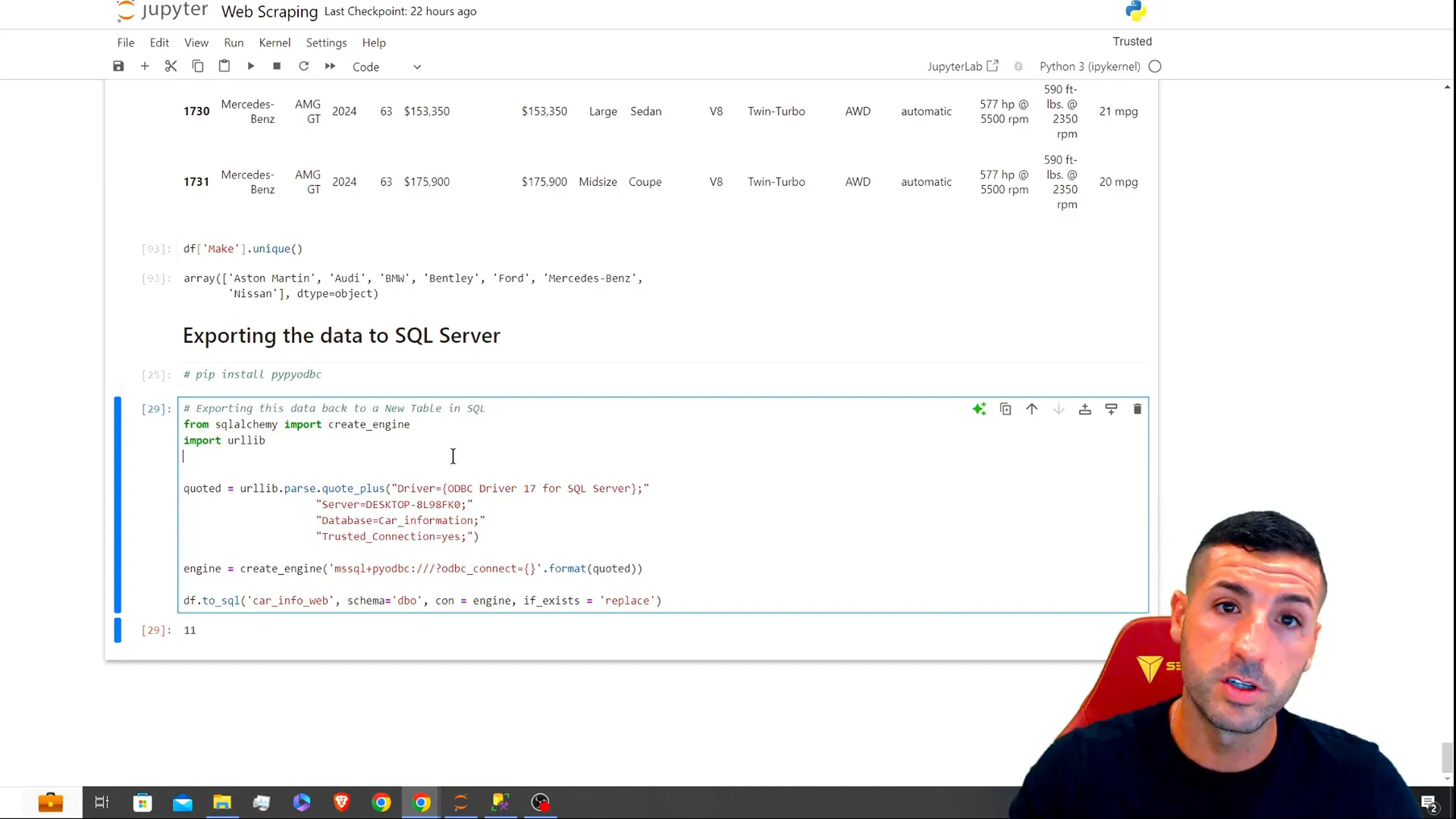
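A quick way to confirm the export worked is to read the file back. This minimal sketch uses `csv.DictReader`, which keys each row by the header line; it assumes the `car_data.csv` file name used above, and `read_back` is a hypothetical helper. Reading with `utf-8-sig` strips a byte-order mark if the file has one and works the same if it doesn't.

```python
import csv


def read_back(path="car_data.csv"):
    """Return the rows of a CSV file as dicts keyed by its header row."""
    with open(path, newline="", encoding="utf-8-sig") as file:
        return list(csv.DictReader(file))
```

For instance, `len(read_back())` should equal the number of cars you scraped.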

Storing Data in SQL Server

If you want to persist the data in a SQL Server database, use a package such as pyodbc to open a connection and run INSERT statements. The snippet below assumes a Cars table with Make, Model, Year, and Price columns already exists.

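import pyodbc

# Establish connection to SQL Server (replace the placeholders with your own details;
# the driver name depends on what is installed, e.g. {ODBC Driver 18 for SQL Server})
conn = pyodbc.connect('DRIVER={SQL Server};SERVER=your_server;DATABASE=your_db;UID=user;PWD=password')
cursor = conn.cursor()

# Insert data into the database; the ? placeholders keep values out of the SQL string
for row in car_data:
    cursor.execute("INSERT INTO Cars (Make, Model, Year, Price) VALUES (?, ?, ?, ?)", row)

conn.commit()
conn.close()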
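If you don't have a SQL Server instance handy, the same insert pattern can be tried locally with Python's built-in `sqlite3` module. This swaps in SQLite for SQL Server; the `Cars` schema and the `save_to_sqlite` helper are assumptions for illustration. Like pyodbc, `sqlite3` uses `?` placeholders, and `executemany` inserts all rows in one call.

```python
import sqlite3


def save_to_sqlite(rows, db_path="cars.db"):
    """Create the Cars table if needed, insert (make, model, year, price) rows, return the row count."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS Cars (Make TEXT, Model TEXT, Year INTEGER, Price TEXT)"
        )
        # Parameterized query: values are passed separately, never pasted into the SQL string
        conn.executemany("INSERT INTO Cars (Make, Model, Year, Price) VALUES (?, ?, ?, ?)", rows)
        conn.commit()
        return conn.execute("SELECT COUNT(*) FROM Cars").fetchone()[0]
    finally:
        conn.close()
```

Calling `save_to_sqlite(car_data)` creates `cars.db` next to your notebook; passing `db_path=":memory:"` gives a throwaway in-memory database for testing.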

Conclusion

Using ChatGPT as a starting point makes writing Python scraping scripts much easier. By following this guide, you can craft your own scrapers, troubleshoot common errors, and save the retrieved data for further analysis. ChatGPT is a great assistant for improving your coding efficiency, but a basic knowledge of Python and web scraping is still needed to get the best results.

Found this useful? If so, subscribe to my channel for more tutorials on data science and web scraping.